When major natural disasters hit, communication infrastructure is liable to be disrupted or destroyed altogether. Enabling communications in disaster scenarios is key to letting people contact their families and to letting first responders, private organizations, and government agencies assess the situation, document damage, and request the necessary assistance. Remote areas of the world are more likely to suffer major communication disruptions after a major natural disaster, and it can take days or weeks to re-establish anything that resembles network connectivity. For example, after Hurricane Maria in 2017, parts of Puerto Rico were completely isolated, cut off not only from communications but from any type of access.

Emergency response network systems have been adapting to this type of scenario; for example, mobile vehicles (i.e., trucks) have been equipped with a wide range of connectivity options, including satellite connectivity. However, these can take days or even weeks to reach their destination and set up a basic network. It is in these remote locations that disruption (and delay) tolerant networking (DTN) can be most valuable for quickly enabling an emergency response network. DTN was originally designed for outer space networking, where disruptions and long delays are present, but it can also be beneficial in terrestrial use cases where connectivity is limited.

Delay and Disruption Tolerant Networking (DTN) uses store-and-forward mechanisms to deliver data without requiring continuous connectivity between the source and the final destination. Instead, packets are delivered to the next hop, which stores the information until it can be forwarded again, and this process repeats until the data reaches the final node. The main interworking layer for DTN is the Bundle Protocol (BP).
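The store-and-forward behavior described above can be illustrated with a minimal sketch. It is a toy model, not the Bundle Protocol or ION: the `Bundle` and `Node` classes, the node names, and the rule of handing every stored bundle to whichever neighbor is in contact are all assumptions made for illustration, and no routing, custody, or expiry is modeled.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Bundle:
    source: str
    destination: str
    payload: bytes


@dataclass
class Node:
    name: str
    stored: deque = field(default_factory=deque)   # bundles waiting for a contact
    delivered: list = field(default_factory=list)  # bundles addressed to this node

    def receive(self, bundle: Bundle) -> None:
        """Deliver locally if this node is the destination, otherwise store."""
        if bundle.destination == self.name:
            self.delivered.append(bundle)
        else:
            self.stored.append(bundle)

    def contact(self, neighbor: "Node") -> int:
        """Forward every stored bundle while a link to `neighbor` is up."""
        sent = 0
        while self.stored:
            neighbor.receive(self.stored.popleft())
            sent += 1
        return sent


def demo() -> list:
    phone, mule, gateway = Node("phone"), Node("mule"), Node("gateway")
    phone.receive(Bundle("phone", "gateway", b"need water at shelter"))
    phone.contact(mule)    # the mule passes over the disaster area
    # No end-to-end path exists at this point: the bundle waits on the mule.
    mule.contact(gateway)  # the mule comes back within range of the gateway
    return [b.payload for b in gateway.delivered]
```

The point of the sketch is that the phone and the gateway are never connected at the same time; the message still arrives because each hop holds it until the next contact.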

In disaster situations, first responders can use DTN to quickly set up a mobile network that allows communications between users (without Internet connectivity) and intermediary mobile nodes, or data mules, which deliver information hop by hop to an area that has Internet access and can respond to the reported data. Links can use point-to-point technologies (e.g., Bluetooth, Wi-Fi), so that data can be routed when satellite and Internet connectivity are not available. This way, users with, e.g., mobile phones can report their status and communicate with emergency responders through this disruption-tolerant network.

Classifying the Scenario

We determine that a major disaster scenario can leverage DTN capabilities to provide a communications solution when:

- Internet communications are compromised (e.g., wired and wireless connectivity is lost).

- Communications cannot be restored within hours. This is typical of remote locations or locations not well served by a multitude of options.

Spatiam Corporation's Architecture for DTN-Enabled Emergency Response Network

We have created an experimental communication network that allows users without mobile and/or Internet access to communicate with Internet-connected users (and Crisis Information Management Systems) via a DTN-enabled rover/drone (i.e., the Data Mule).

DTN-based emergency response communications network.

In this network:

- The Mobile Device is the interface with the end-user in the disaster area. It collects all necessary data (e.g., text, pictures, videos, voice clips) to communicate to other users and to the emergency response team. Communications between the users' mobile devices and the Mule use both traditional Internet technologies and the Bundle Protocol (for mobile devices implementing the DTN-based application). Mobile devices implementing the DTN-based application will also be able to communicate with each other via an ad-hoc network (each mobile phone becomes a data mule in itself).

- The Data Mule communicates with both mobile phones and with the DTN Gateway. The main purpose of the Mule is to physically transfer the data between the users in the disaster area and the Gateway connected to the Internet, allowing the users' data to reach its destination (other users, Crisis Information Management Systems - CIMS, etc.). It is assumed the mule will be installed over a physical transportation system that is able to overcome the physical barriers of the disaster (motorbike, drone, rover, etc.). A drone is of particular interest as altitude is a contributing factor for providing (radio) coverage to more users.

- The DTN Gateway is the interface that connects the mule (with its DTN protocols) to the Internet. The Gateway will incorporate the necessary interfaces (protocols and APIs) to connect to key systems such as e-mail and CIMS. The physical connectivity between the mule and the DTN Gateway can be wired (e.g., Ethernet or USB) or wireless (Wi-Fi or cellular).

Experimental Setup

We used a Mobile Phone (both Android and iPhone) as the end-user device, a Raspberry Pi 4 as the Mule processing unit and a laptop emulating the Crisis Management System. We used the NASA/JPL DTN implementation, ION (Interplanetary Overlay Network), as the Bundle Protocol stack.

The figure below illustrates the main components and the software (and protocol) stack.

Main components of the experimental network and software/protocol stack

We began our setup with a Raspberry Pi loaded with our code, a GPS module, network card, long-range Wi-Fi antenna, and USB camera.

Raspberry Pi with GPS and Wi-Fi module

The hardware package is attached to the mule and provides an interface for end users to connect to (through a captive portal), as well as DTN gateway services for communications and data relay. The mule travels (or flies) to an affected area and creates a Wi-Fi network that users without Internet connectivity can join to send distress messages. Through the captive portal, users will also have the option to download a DTN Android app that provides full communication features and accessibility.

- Mobile phones will detect the Wi-Fi signal and trigger the captive portal (similar to the experience of going to your coffee shop of choice).

- Once the user accepts, our Captive Portal presents information to the user (note that the contents of the captive portal can be customized on the Mule for the specific situation) and lets the user identify themselves and send a message. In addition, users can download a native DTN app (not available at this point), which extends the capabilities to sending pictures and files and to ad-hoc communications (between Mobile Phones).

- When a user message is received at the Mule, the Mule takes a picture of the landscape with its onboard camera, logs the GPS coordinates, and continues along its route. The landscape image, user message, and GPS coordinates are securely packaged as a .zip file in the filesystem, together with any other messages the Mule has received.

- Once the mule has finished its route and returns to the station, it awaits commands for data processing and delivery. When a transfer command is received by the mule, all data is collected and compressed into a single zip file which is archived and prepared for delivery. DTN is then used to transfer the collected data to the station.

Captive Portal Wi-Fi signal discovered by end-user Mobile Phone (Android)

Captive Portal, allowing users to identify and send messages

Experimental file structure showing the end-user messages packaged

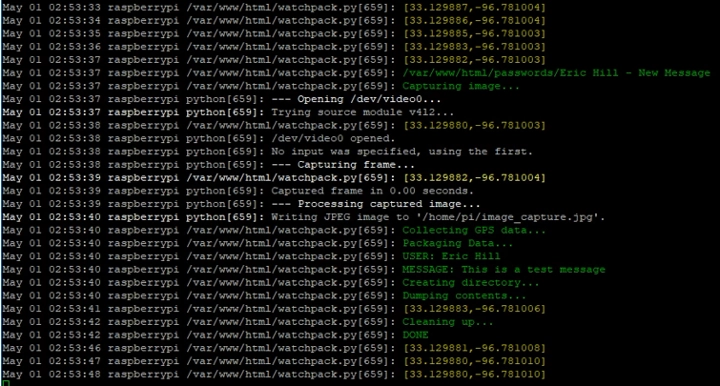

The onboard code is split into several services that run simultaneously. The watchpack service monitors incoming messages/data from the captive portal and constantly formats updated GPS information into the necessary types. When a new message is received from the portal, the service triggers the onboard camera to take a landscape photo, attaches a timestamp, and builds a file containing the photo, message, GPS information, and sender information. A logging system is built in that allows an admin user to monitor live GPS updates and incoming messages.
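The packaging step performed by the watchpack service can be sketched roughly as follows. This is not the project's code: the function name `build_package`, the file names inside the archive, and the JSON record layout are assumptions chosen for illustration, the photo is passed in as raw bytes rather than captured from a camera, and no encryption or signing is shown.

```python
import io
import json
import zipfile
from datetime import datetime, timezone
from typing import Optional


def build_package(sender: str, message: str, lat: float, lon: float,
                  photo_jpeg: bytes, when: Optional[datetime] = None) -> bytes:
    """Return zip bytes holding the photo plus a JSON record of the report."""
    when = when or datetime.now(timezone.utc)
    # Hypothetical record layout: sender, message, GPS fix, and timestamp.
    record = {
        "sender": sender,
        "message": message,
        "gps": {"lat": lat, "lon": lon},
        "timestamp": when.isoformat(),
    }
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", zipfile.ZIP_DEFLATED) as zf:
        zf.writestr("message.json", json.dumps(record, indent=2))
        zf.writestr("landscape.jpg", photo_jpeg)
    return buf.getvalue()
```

Building the archive in memory keeps the sketch free of filesystem assumptions; a service like the one described would write the returned bytes to a per-message file on the Mule.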

Monitoring of watchpack service

The second background service is our ionlistener service. This service begins by spinning up a DTN ION instance that covers the IP range provided by the hardware. ION is set up so that data and commands can be sent to the Mule. A download command arrives at the Mule packaged with authentication credentials and a return DTN Endpoint ID to which the collected data will be transferred. All available data from the Mule's flight path is packaged into a zip file, archived, and transmitted. Once receipt has been confirmed, the Mule is set to allow additional communications.
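The handling of a download command might look like the sketch below. The actual command format is not described here, so the `key=value` text layout, the field names `token` and `return_eid`, and both function names are hypothetical. The sketch stops at producing the archive and the return Endpoint ID; handing the file to ION for transmission is deliberately left out.

```python
import hmac
import zipfile
from pathlib import Path


def parse_command(text: str) -> dict:
    """Parse 'key=value' lines from the (hypothetical) command file."""
    fields = {}
    for line in text.splitlines():
        if "=" in line:
            key, value = line.split("=", 1)
            fields[key.strip()] = value.strip()
    return fields


def handle_download(command_text: str, expected_token: str,
                    data_dir: Path, archive_path: Path):
    """Check credentials, zip all collected files, return (archive, return EID).

    `archive_path` must be outside `data_dir`, or the archive would try to
    include itself.
    """
    cmd = parse_command(command_text)
    # Constant-time comparison avoids leaking the token through timing.
    supplied = cmd.get("token", "").encode()
    if not hmac.compare_digest(supplied, expected_token.encode()):
        raise PermissionError("download command rejected: bad credentials")
    if "return_eid" not in cmd:
        raise ValueError("download command has no return endpoint ID")
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for item in sorted(data_dir.iterdir()):
            if item.is_file():
                zf.write(item, arcname=item.name)
    return archive_path, cmd["return_eid"]
```

Rejecting the command before touching the data means an unauthenticated sender cannot trigger archiving, which matters on a node that accepts connections from arbitrary devices.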

Ionlistener setup and data exchange

Test Results

End-to-end tests have been successfully performed to demonstrate the functionality of the system. As it would be in a real-life scenario, the Mule hardware is disconnected from all Internet networks and generates a Wi-Fi signal with a custom built-in captive portal that functions as the gateway for user and emergency response communications.

The flight path of the Mule can be simulated by taking the hardware package in a car and driving out of range of the emergency response network. Once at an appropriate distance, a user connects to the network and is presented with a captive portal that allows them to trigger the functional pieces of the drone (camera, GPS, packaging, etc.) by entering their name and the message to be delivered to emergency services. At that point, the user data is stored permanently in the mule's file system. A Mule returning to the base station can be represented by simply driving the hardware back into range of the Emergency Response network.

Another DTN (ION) instance runs on the Emergency Response network so that it can send the download command to the Mule. After the download command is sent, along with credentials and the return DTN Endpoint ID, via a text file, the incoming data bundles can be received. Once received, all the images and data can be unzipped and viewed on the Emergency Response network computer, enabling end-user communications in case of a complete network disruption.

Limitations and Next Steps

Several challenges were faced during the development and experimentation process. Some of these limitations come from the nature of the scenario. For example, we do not expect users on the ground to have a custom application or device already installed, since such a requirement can compromise connectivity to the network, prevent adoption, or increase costs in hardware and preparation. For this reason, the captive portal is the default way to establish communications between mobile devices and the mule. However, in most operating systems captive portals are only meant to authenticate the connection, not to act as a full web browser, so features are limited. Depending on the OS, captive portal hyperlinks might not work, and if the user leaves the captive portal (e.g., to check a notes or settings app), bringing it back up might require disconnecting from and reconnecting to the network (which is not always an obvious solution). A solution to this challenge is to guide the user to open the captive portal interface in the native browser by going to www.abcd.com, which we expect not to be cached on the user's device and which therefore brings up the captive portal in the native browser, where the interface functions properly.

Even then, the captive portal does not allow for ad-hoc DTN connectivity between devices (as end users do not act as DTN nodes themselves). To allow DTN communications between end users, an application can be downloaded through the captive portal that provides full functionality (photo, file, and message sharing, and DTN-based ad-hoc communications), as well as a persistent interface.

This brings us to the next challenge: Android devices allow users to download applications from the Internet if the user grants permission, but this is not the case for iOS. For this reason, we have chosen to provide basic communication capabilities through the captive portal and to give Android users the option to download a native communications application (not available yet).

Lastly, we had to work around networking issues due to the static nature of ION. DTN nodes have a unique ID (known as an Endpoint ID, or EID), which ION then matches to the node's IP address. In our case, the IP address of each mobile device is decided at the time of connection: if the mule has never seen a certain mobile phone before, it cannot assign it a predetermined address. Our solution was to pre-assign a block of 50 private IP addresses to 50 IPN EIDs as part of the contact plan of the mule (this number can be changed). Knowing the range of IP addresses the mule's Wi-Fi module will give connecting devices, we can link, e.g., 192.168.0.1 -> EID 1, 192.168.0.2 -> EID 2, and so on. Once a mobile device connects to the mule, it is assigned an IP address and EID, which are recorded so that the same assignment is made on the next connection.
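The pre-assignment scheme can be sketched as a small lookup table. The starting address, the class name `Assignments`, and the idea of keying devices by an identifier such as a MAC address are assumptions for illustration; in practice the address lease would come from the Wi-Fi module's DHCP service, and the mapping would be expressed in ION's own configuration rather than in Python.

```python
import ipaddress


def build_eid_table(first_ip: str = "192.168.0.1", count: int = 50) -> dict:
    """Map `count` consecutive IPv4 addresses to node numbers 1..count."""
    start = ipaddress.IPv4Address(first_ip)
    return {str(start + i): i + 1 for i in range(count)}


class Assignments:
    """Remember which device got which address so it is reused on reconnect."""

    def __init__(self, table: dict):
        self.free = list(table.items())  # (ip, node_number) pairs not yet handed out
        self.by_device = {}              # device id (e.g. MAC) -> (ip, node_number)

    def assign(self, device_id: str):
        """Return the device's (ip, node_number), allocating one on first contact."""
        if device_id not in self.by_device:
            if not self.free:
                raise RuntimeError("address block exhausted")
            self.by_device[device_id] = self.free.pop(0)
        return self.by_device[device_id]
```

With the default arguments, the first device to connect receives 192.168.0.1 and node number 1, and receives the same pair again on every later connection. The sketch also makes the limitation visible: the table lives only on the mule, so a second node has no way to agree on the same numbering.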

For now, mobile devices only connect to the mule (and not to each other), making the described solution work for the current use case. However, if ad-hoc communications were to be supported between end users, both mobile devices and the mule would need to assign the same EIDs to each node, requiring a different process where DTN nodes already have a predefined EID (that the mule knows about) before connectivity occurs.

Before tackling this problem, however, we need to address the greatest limitation of this project: bringing true DTN capabilities to the mobile nodes themselves. Previous versions of ION (circa 2016) were successfully ported to Android, but because the mobile industry moves at lightning speed, those applications are long outdated. A current application would need frequent maintenance and the ability to support many different Android versions to make the solution scalable to disaster scenarios (any device works, or at least a majority).

Conclusion

Communications are critical in the face of a major disaster, helping survivors and first responders collect the information necessary to provide assistance. The use of DTN technologies opens great possibilities for offering emergency response communication services in major disaster scenarios, providing a bridge for users to communicate while more capable networks are reinstated.

In this project, we created an experimental network in which users with mobile phones can connect to a Captive Portal via Wi-Fi to exchange critical information with an emergency response system using a DTN-enabled Mule.